Using Ultrasound Imaging in Learning a Second Language

Image purchased from Getty Images. Copyright.

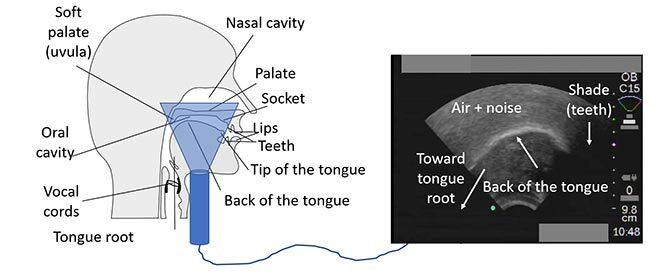

Learning a new language is never easy, yet for migrants it is often a necessary part of integration. In addition to the many rules of grammar, syntax and vocabulary, people wishing to acquire a second language must learn to master or modify articulatory patterns of the lips, jaw and tongue in order to produce sounds that do not exist in their native language. Beginners may try to imitate lip and jaw movements as they work to perfect these new sounds, but the articulator responsible for most of the variation in the vocal tract remains invisible: the tongue. It is the hardest articulator to conceptualize, visualize and master. Researchers at the Biomedical Information Processing Laboratory (LATIS) aim to help learners overcome this obstacle by using ultrasound imaging.

Ultrasound Imaging to Observe Tongue Movements

The idea of using ultrasound images as biofeedback to perfect pronunciation is not new, and many in the speech therapy community believe in its value. So far, however, the evidence of its effectiveness remains qualitative and anecdotal. Moreover, existing studies focus mainly on pronunciation problems commonly encountered in children (e.g., the English “r”).

LATIS researchers will study the tongue movements that people learning English as a second language (ESL) make when producing certain sounds. By following a cohort of learners longitudinally, the team will also attempt to analyze how these movements change as learning progresses. Few mathematical tools and models currently exist to characterize this type of data, which is what makes this research particularly challenging.

Obstacles to Overcome

Although detecting and interpreting tongue gestures with ultrasound may seem straightforward in principle, several difficulties must be overcome. First, each vocal tract has a unique shape, and each speaker uses their own strategies to produce sounds. Indeed, articulation is a redundant system: several lip, tongue and jaw configurations can produce the same sound.

This is further complicated by a phenomenon known as coarticulation: certain sounds are influenced by the sounds that precede or follow them. For example, when the vowel “oo” follows the consonant “b” (boo), the tongue initiates the movement for the vowel before the sound is produced; the situation is different when “oo” follows a “t” (too). Coarticulation of the same syllable also varies from one language to another, which could make it an especially difficult skill for second-language learners to acquire.

Vocal tract ultrasound imaging, which has been used for several decades to study articulatory phonetics, is practical, safe and non-invasive. However, image quality varies greatly from one subject to another, from one practitioner to another, and from one sound to another. When a speaker produces a “t”, for example, the tip of the tongue may become invisible because of the presence of air. This complicates both the automatic image analysis the study will require and the interpretation of the images by inexperienced observers for biofeedback purposes.

To Be Continued…

As a first step, the team will develop software to display various forms of ultrasound-based articulatory biofeedback, from simple to more sophisticated, during pronunciation exercises designed for ESL learners. Several cohorts of ESL learners from different language groups will then be recruited to evaluate these biofeedback approaches and to study how they learn new articulatory gestures over a period of several months. New mathematical models will be developed to characterize how articulatory gestures evolve over the course of learning, and to link these gestures to the acoustic characteristics of the sounds produced and to how listeners perceive them.

The experimental protocol will combine knowledge and approaches from computer science, linguistics and language didactics. This interdisciplinary approach will be used to design new mathematical and computational tools that yield linguistically interpretable experimental data. Beyond image analysis, the project will draw on expertise in phonetics, phonology and language didactics through collaborations with Professors Lucie Ménard of UQAM and Walcir Cardoso of Concordia University.